| Field | Value |
|---|---|
| Uploader | Ahmet-Muner |
| Date Added | 23.01.2018 |
| File Size | 68.33 Mb |
| Operating Systems | Windows NT/2000/XP/2003/7/8/10, MacOS 10/X |
| Downloads | 50209 |
| Price | Free* [*Free Registration Required] |

Use Python to download files from websites | Cron-Dev

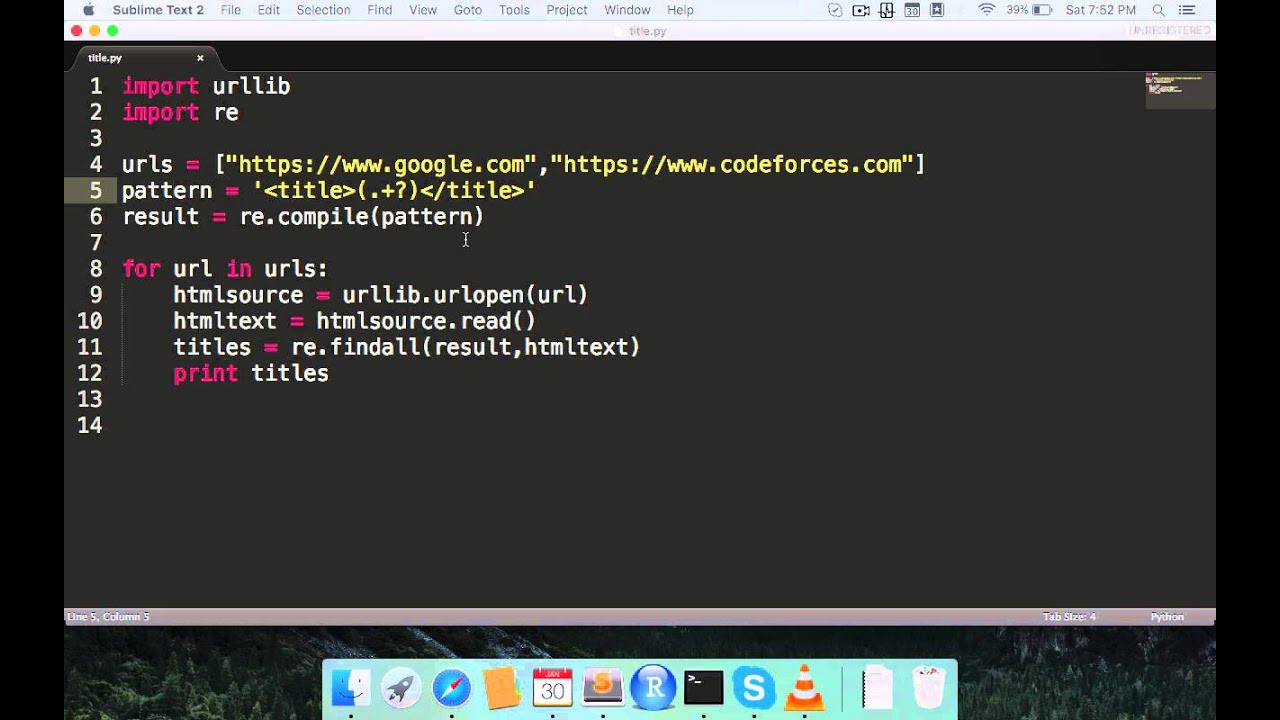

This lesson introduces Uniform Resource Locators (URLs) and explains how to use Python to download and save the contents of a web page to your local hard drive.

About URLs

A web page is a file that is stored on another computer, a machine known as a web server.

Hello everyone, I would like to share different ways to use Python to download files from a website. Usually files are returned by clicking on links, but sometimes files are embedded as well, for instance an image or PDF embedded in a web page.

I was wondering whether it is possible to write a script that programmatically goes through a web page and downloads blogger.com file links automatically. Before attempting it on my own, I wanted to know whether a Python/Java script could download blogger.com files from a website. This is called web scraping, and Python has various packages to help with it.
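A minimal sketch of the web-scraping step described above, using only the standard library's `html.parser` and `urllib.parse.urljoin`: it collects links from `<a>` tags whose path ends with a chosen extension and resolves relative links against the page URL. The class and function names are hypothetical, and `.pdf` is only an example extension, since the post does not say which file type it targets. Each collected URL could then be passed to any download function.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class FileLinkParser(HTMLParser):
    """Collect href values from <a> tags that end with one of the given extensions."""

    def __init__(self, base_url, extensions):
        super().__init__()
        self.base_url = base_url
        self.extensions = tuple(ext.lower() for ext in extensions)
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Ignore any query string when checking the extension.
        if href and href.lower().split("?", 1)[0].endswith(self.extensions):
            # Resolve relative links such as "docs/a.pdf" against the page URL.
            self.links.append(urljoin(self.base_url, href))


def find_file_links(html, base_url, extensions=(".pdf",)):
    """Return absolute URLs of all matching file links found in an HTML string."""
    parser = FileLinkParser(base_url, extensions)
    parser.feed(html)
    return parser.links


if __name__ == "__main__":
    page = '<a href="docs/a.pdf">A</a> <a href="/b.PDF?x=1">B</a> <a href="c.html">C</a>'
    print(find_file_links(page, "https://example.com/reports/"))
    # prints ['https://example.com/reports/docs/a.pdf', 'https://example.com/b.PDF?x=1']
```

In practice the HTML string would come from downloading the page itself; parsing is kept separate here so it can be tried without a network connection.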

Python download file from website

I use wget on Windows. I would prefer to have the entire utility written in Python, though.

I struggled, though, to find a way to actually download the file in Python, which is why I resorted to wget.

This is the most basic way to use the library, minus any error handling. You can also do more complex things, such as changing headers. See the library's documentation for details.

One more option uses urlretrieve.

Alternatively, use the Python requests library. Requests has many advantages over the alternatives because its API is much simpler, especially if you have to handle authentication.
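A minimal sketch of the basic standard-library approach in Python 3, where the functionality of Python 2's urllib2 lives in `urllib.request`. It shows reading a response with `urlopen` (including one custom header, as an example of "changing headers"), saving it in binary mode, and the `urlretrieve` shortcut. The function names and the `User-Agent` value are hypothetical placeholders, and error handling is omitted as in the text.

```python
import urllib.request


def fetch_bytes(url, timeout=30):
    """Read the whole response body into memory (fine for small files)."""
    # Setting a User-Agent is one example of changing headers; the value is a placeholder.
    request = urllib.request.Request(url, headers={"User-Agent": "example-downloader/0.1"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()


def save_url(url, filename):
    """Download url and write the bytes to filename in binary mode; return the byte count."""
    data = fetch_bytes(url)
    with open(filename, "wb") as f:
        f.write(data)
    return len(data)


def save_url_retrieve(url, filename):
    """Same result using urlretrieve, which opens and writes the file itself."""
    path, _headers = urllib.request.urlretrieve(url, filename)
    return path


# Usage: save_url("https://example.com/file.mp3", "file.mp3")
```

`fetch_bytes` holds the entire body in memory, so it suits small files; `urlretrieve` writes to disk block by block.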

People have expressed admiration for the progress bar. It's cool, sure. There are several off-the-shelf solutions now, including tqdm.
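For comparison with off-the-shelf libraries like tqdm, here is a stdlib-only sketch of a text progress bar built on `urlretrieve`'s `reporthook` parameter. The hook receives the number of blocks transferred so far, the block size, and the total size (`-1` when the server sends no length). The helper names and the bar width are arbitrary choices for illustration.

```python
import sys
import urllib.request


def make_progress_hook(stream=sys.stderr, width=40):
    """Return a reporthook for urlretrieve that draws a simple text progress bar."""

    def hook(block_num, block_size, total_size):
        downloaded = block_num * block_size
        if total_size > 0:
            fraction = min(downloaded / total_size, 1.0)
            filled = int(width * fraction)
            bar = "#" * filled + "-" * (width - filled)
            # "\r" returns to the start of the line so the bar redraws in place.
            stream.write(f"\r[{bar}] {fraction:6.1%}")
        else:
            # No Content-Length from the server: report bytes only.
            stream.write(f"\r{downloaded} bytes")
        stream.flush()

    return hook


def download_with_progress(url, filename):
    """Download url to filename while drawing a progress bar on stderr."""
    path, _headers = urllib.request.urlretrieve(url, filename, reporthook=make_progress_hook())
    sys.stderr.write("\n")
    return path
```

Redrawing with `\r` keeps the bar on one line, so there is no need to clear the whole screen between updates.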

The `'wb'` mode passed to `open()` opens the file for writing in binary mode (truncating any existing file), so raw bytes can be saved rather than just text.

I wrote a wget library in pure Python for this purpose. As of version 2, it is essentially urlretrieve with extra features.

I agree with Corey: urllib2 is more complete than urllib and is likely the module to use for more complex tasks. To round out the answers, though, urllib is the simpler module if you just want the basics. (In Python 3, the download functions of both live in `urllib.request`.)

That will work fine. Or, if you don't want to deal with the response object, you can call read directly.

In Python 3 you can use urllib3 and shutil. urllib3 is a third-party package, installed with pip or pip3 depending on whether Python 3 is your default; shutil is part of the standard library and needs no installation.

This is pretty powerful. It can download files in parallel, retry upon failure, and even download files on a remote machine.
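A minimal sketch of the shutil approach that needs nothing from pip: it uses the standard library's `urllib.request.urlopen` (not the third-party urllib3) together with `shutil.copyfileobj`, which copies the response into the file in fixed-size chunks instead of reading it all at once. The function name is hypothetical.

```python
import shutil
import urllib.request


def download_to_file(url, output_file):
    """Stream the response into output_file without holding the whole body in memory."""
    # Both urllib.request and shutil ship with Python 3.
    with urllib.request.urlopen(url) as response, open(output_file, "wb") as out_file:
        # copyfileobj reads and writes in chunks until the response is exhausted.
        shutil.copyfileobj(response, out_file)
    return output_file


# Usage: download_to_file("https://example.com/big.iso", "big.iso")
```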

If speed matters to you, I made a small performance test for the urllib and wget modules; for wget, I tried once with the status bar and once without. I used three different multi-megabyte files, a different one for each test, to eliminate the chance that some caching was going on under the hood. Tested on a Debian machine with Python 2.

Just for the sake of completeness, it is also possible to call any program for retrieving files using the subprocess module. Programs dedicated to retrieving files are more powerful than Python functions like urlretrieve. For example, wget can download directories recursively (-r), handle FTP, redirects, and HTTP proxies, and avoid re-downloading existing files (-nc), while aria2 can do multi-connection downloads, which can potentially speed up your downloads.
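A sketch of calling wget through `subprocess`, assuming wget is installed and on `PATH`. It uses two standard wget options: `-P` (directory to save into) and `-nc` (no-clobber, skip files that already exist). The helper names are hypothetical. Passing a list of arguments instead of a shell string avoids quoting problems with URLs.

```python
import shutil
import subprocess


def build_wget_command(url, directory=".", no_clobber=True):
    """Build the wget argument list: -P sets the target directory, -nc skips existing files."""
    cmd = ["wget", "-P", directory]
    if no_clobber:
        cmd.append("-nc")
    cmd.append(url)
    return cmd


def run_wget(url, directory="."):
    """Run wget and raise CalledProcessError if it exits with a non-zero status."""
    if shutil.which("wget") is None:
        raise FileNotFoundError("wget is not installed or not on PATH")
    # No shell=True: the URL is passed as a single argument, so no shell quoting is needed.
    subprocess.run(build_wget_command(url, directory), check=True)


# Usage: run_wget("https://example.com/file.mp3", "downloads")
```

The same pattern works for aria2 or curl. Only the argument list changes, and each tool's manual is the authority on its flags.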

This may be a little late, but I saw PabloG's code and couldn't help adding an `os.system('cls')` call. Check it out. If you are running in an environment other than Windows, you will have to use something other than 'cls'.
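One common way to handle the non-Windows case is to choose the command based on `os.name`, which is `"nt"` on Windows and `"posix"` on Linux, macOS, and Cygwin, where `clear` is the usual equivalent of `cls`. A small sketch with hypothetical helper names:

```python
import os


def clear_command():
    """Return the command that clears the terminal on this platform."""
    # os.name is "nt" on Windows; POSIX systems use "clear" instead of "cls".
    return "cls" if os.name == "nt" else "clear"


def clear_screen():
    """Clear the terminal by running the platform's clear command."""
    os.system(clear_command())
```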

I have fetched data from a couple of sites, including text and images; the two approaches above probably solve most tasks. Because urllib is included in the Python 3 standard library, your code can run on any machine that runs Python 3 without pre-installing a site package.

I have tried only the requests and urllib modules. Other modules may provide something better, but this is the one I used to solve most of my problems.

So, how do I download the file using Python?

Many of the answers below are not a satisfactory replacement for wget. Among other things, wget (1) preserves timestamps and (2) auto-determines the filename from the URL, appending a numeric suffix (.1, .2, and so on) when the file already exists.

If you want any of those, you have to implement them yourself in Python, but it's simpler to just invoke wget from Python.

A short solution for Python 3 uses the standard-library urllib package. In Python 2, use urllib2, which comes with the standard library.

This won't work if there are spaces in the URL you provide. In that case, you'll need to parse the URL and URL-encode the path. A Python 3 solution is available on Stack Overflow, just for reference.
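A sketch covering two of the points above with `urllib.parse`: percent-encoding spaces in the URL path, and roughly mimicking wget's filename handling (name taken from the URL path, `index.html` when the path ends in `/`, and `.1`, `.2`, ... appended when the file already exists). Timestamp preservation is not covered. The function names are hypothetical, and this is an approximation of wget's behaviour, not a reimplementation.

```python
import os
import posixpath
from urllib.parse import quote, unquote, urlsplit, urlunsplit


def quote_url_path(url):
    """Percent-encode unsafe characters (such as spaces) in the path; leave the rest alone."""
    parts = urlsplit(url)
    # safe="/%" keeps path separators and existing %XX escapes from being re-encoded.
    return urlunsplit(parts._replace(path=quote(parts.path, safe="/%")))


def filename_from_url(url, directory="."):
    """Derive a local filename from the URL path, adding .1, .2, ... if it already exists."""
    name = posixpath.basename(unquote(urlsplit(url).path)) or "index.html"
    candidate = os.path.join(directory, name)
    counter = 1
    while os.path.exists(candidate):
        candidate = os.path.join(directory, f"{name}.{counter}")
        counter += 1
    return candidate


# quote_url_path("http://example.com/my file.mp3") returns "http://example.com/my%20file.mp3"
```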

In Python 2, the path can be URL-encoded via urllib2; in Python 3, the equivalent is `urllib.parse.quote`.

This does not work on Windows with larger files. You need to read all blocks!

One more, using urlretrieve. Oddly enough, this worked for me on Windows when the urllib2 method wouldn't. The urllib2 method worked on Mac, though.

Also, on Windows you need to open the output file in "wb" mode if it isn't a text file.

How does this handle large files? Does everything get stored in memory, or can this be written to a file without a large memory requirement?

Why would a URL library need a file-unzip facility? Read the file from the URL, save it, and then unzip it in whatever way floats your boat. Also, a zip file is not a 'folder' as it appears in Windows; it's a file.
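Following the "save it, then unzip it" advice, a minimal sketch of the separate unzip step with the standard library's `zipfile` module, applied to an archive that has already been downloaded to disk. The function name is hypothetical.

```python
import zipfile


def extract_zip(zip_path, target_dir):
    """Unpack an already-downloaded zip archive into target_dir and return its member names."""
    with zipfile.ZipFile(zip_path) as archive:
        # Only extract archives you trust; the zipfile docs warn about malicious member paths.
        archive.extractall(target_dir)
        return archive.namelist()


# Usage: extract_zip("downloaded.zip", "unpacked")
```

Keeping download and extraction as separate steps means any of the download approaches can be combined with it.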

Ali: `r.text` is the content returned as Unicode; `r.content` is the content returned as bytes. Read about it in the requests documentation.

The disadvantage of this solution is that the entire file is loaded into RAM before being saved to disk. Keep this in mind when using it for large files on a small system with limited RAM, such as a router.

To avoid reading the whole file into memory, try passing a size argument to the read call so the data is read in chunks. Alternatively, use shutil with Python 3's urllib. Very nice answer for Python 3; see also the documentation.
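With requests, the usual way to avoid loading the whole file into RAM is `stream=True` together with `Response.iter_content`, which yields the body in chunks that can be written to disk one at a time. A sketch, assuming requests is installed (`pip install requests`). The function name, the 64 KiB chunk size, and the 30-second timeout are arbitrary choices.

```python
import requests


def download_streaming(url, filename, chunk_size=64 * 1024):
    """Download url to filename with requests, writing chunk by chunk instead of using r.content."""
    # stream=True defers downloading the body until iter_content is consumed.
    with requests.get(url, stream=True, timeout=30) as response:
        response.raise_for_status()  # raise on 4xx/5xx instead of saving an error page
        with open(filename, "wb") as f:
            for chunk in response.iter_content(chunk_size=chunk_size):
                f.write(chunk)
    return filename


# Usage: download_streaming("https://example.com/large.zip", "large.zip")
```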

I would remove the parentheses from the first line, because it is not a particularly old feature. Is there no option to save with a custom filename?

The progress bar does not appear when I use this module under Cygwin.

You should change from -o to -O to avoid confusion, as it is in GNU wget. Or at least both options should be valid.

The -o option already behaves differently; this way it is compatible with curl. Would a note in the documentation help to resolve the issue?

Or is it an essential feature for a utility with such a name to be command-line compatible?

The most commonly used calls for downloading files in Python are found in urllib.


Python for Automation #2: Download a File from Internet with Python (video, 8:18)

Learn how to download files from the web using Python modules like requests, urllib, and wget. We used many techniques and downloaded from multiple sources. Now let's write code that uses a coroutine to download files from the web.
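The coroutine code itself is not included here, so what follows is a stdlib-only sketch of the idea, not the author's version. Each download is wrapped in a coroutine that runs the blocking `urlretrieve` call in a worker thread via `asyncio.to_thread` (Python 3.9+), and `asyncio.gather` runs them concurrently. A native async HTTP client such as aiohttp would be the alternative. The function names are hypothetical.

```python
import asyncio
import urllib.request


def _fetch_to_file(url, filename):
    """Blocking download; executed in a worker thread so the event loop stays free."""
    urllib.request.urlretrieve(url, filename)
    return filename


async def download_one(url, filename):
    """Coroutine wrapper around the blocking download."""
    return await asyncio.to_thread(_fetch_to_file, url, filename)


async def download_all(jobs):
    """Download (url, filename) pairs concurrently; results come back in input order."""
    return await asyncio.gather(*(download_one(url, name) for url, name in jobs))


# Usage: asyncio.run(download_all([("https://example.com/a.pdf", "a.pdf"),
#                                  ("https://example.com/b.pdf", "b.pdf")]))
```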
